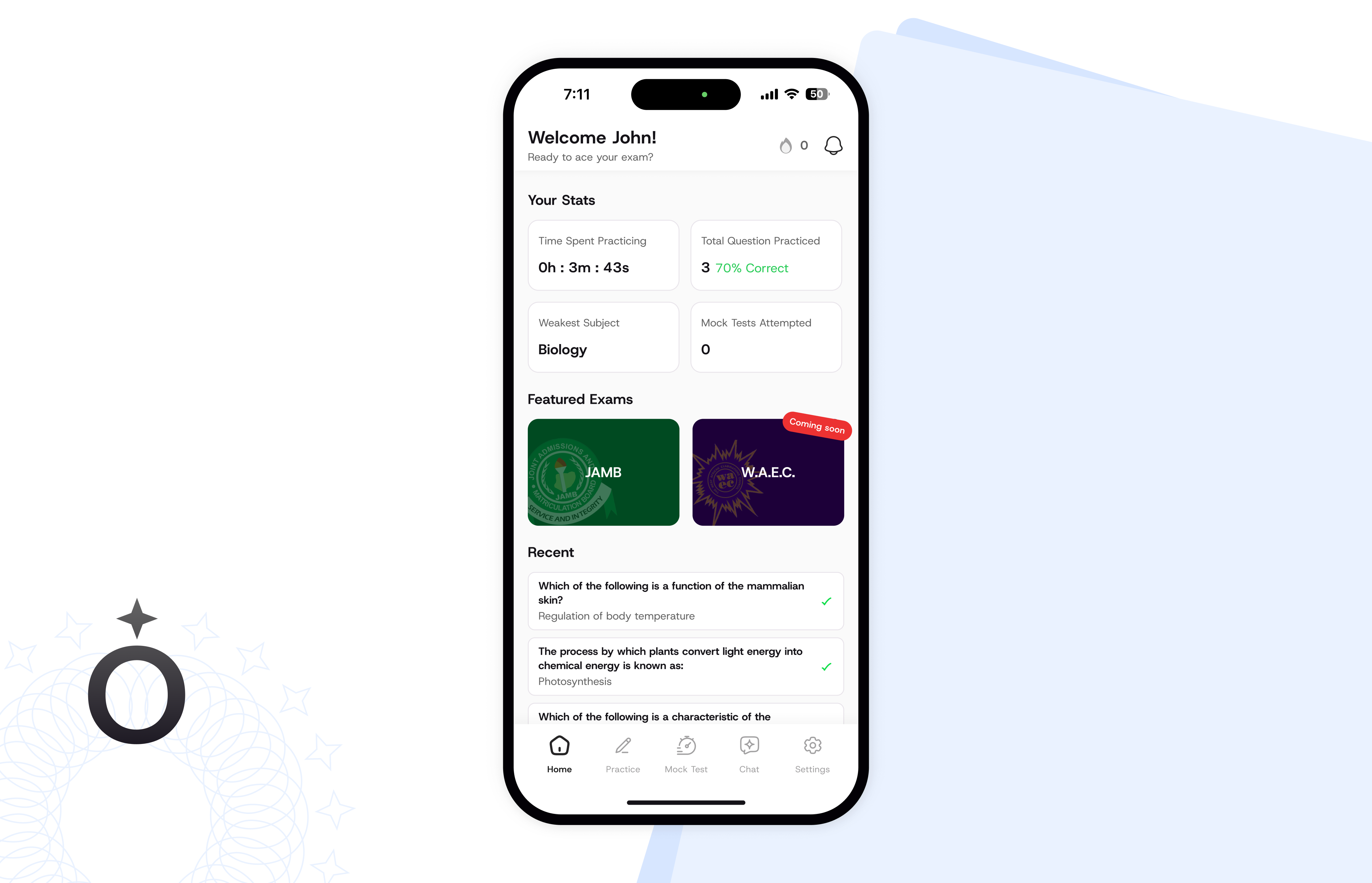

Juno AI is a mobile learning platform that helps students prepare for two key standardized exams, JAMB and SSCE, both required for admission into higher institutions. The app combines AI tutoring and feedback, structured practice sessions, and real exam simulation to build confidence and competence in test-takers, helping them move from high school to higher education.

It bridges the gap between traditional study habits and adaptive, AI-supported digital learning. Learners can prepare more effectively and consistently for academic advancement, right in the palm of their hands.

The goal was to design a product that not only simplifies preparation but also builds student discipline and readiness through intelligent support and progressive learning.

Preparing for national entrance exams is often stressful and isolating, especially for students without access to structured guidance or personalized feedback. Traditional prep methods like printed booklets or general online resources lack adaptability to each student’s pace and knowledge gaps.

The key challenge was to design a mobile experience that feels as reliable as a tutor, yet scalable to serve thousands of learners at once. The interface had to stay focused, distraction-free, and academically serious, while allowing AI to handle context-rich interactions without overwhelming users.

Students today face constant distraction from social media, entertainment apps, and notifications. Sustaining academic focus on a mobile platform therefore required designing for motivation as much as for usability.

From interviews and early concept testing, several insights shaped the product direction:

These insights set the design tone: clear, calm, and academically focused, so that students could trust the app for sustained study.

Although Juno is still in closed testing, early feedback from selected user groups has validated several of its core design principles and feature hypotheses. The testing cohort, composed primarily of final-year secondary school students preparing for national entrance exams, provided qualitative insights that continue to shape the product ahead of its public release.

Key Observations from Closed Testing:

The closed testing phase continues to inform refinements around personalized progress tracking, question difficulty calibration, and AI explanation depth. Future updates will focus on expanding the data visualization layer to help students see longitudinal growth and topic mastery before the app’s public launch.

The biggest takeaway was that students don’t just need access; they need guidance and reassurance. Features such as AI chat and streak tracking highlighted how digital tools can reduce anxiety and sustain focus, especially in an era of fragmented attention. It was essential to craft an interface that felt encouraging rather than punitive, preserving a sense of progress even when performance fluctuated.

Looking ahead, we plan to expand the examination categories beyond JAMB and SSCE to other vital certifications.